As more than half of Australian office workers report using generative artificial intelligence (AI) for work, we’re starting to see this technology affect every part of society, from banking and finance through to weather forecasting, health and medicine.

Many people are now using AI tools like ChatGPT, Claude or Gemini to get advice, find information or summarise longer passages of text. But our recent research demonstrates how generative AI can be used for much more than this, returning results in different formats.

In one sense, AI tools are neutral – they can be used for good or ill depending on one’s intent.

However, the models powering such tools can also suffer from biases based on how they were developed. AI tools, especially image generators, are also power hungry, ratcheting up the world’s energy usage.

And there are unresolved copyright claims surrounding AI-generated outputs, given the content used to train some of the models isn’t owned by the organisations developing the AI.

But ultimately, there’s no escaping generative AI. Learning more about what these tools can do will improve your digital literacy and help you understand their full impact, from benign to problematic.

1. Imagining what lies beyond the frame

Adobe’s recently developed “generative expand” tool allows users to expand the canvas of their photos and have Photoshop “imagine” what is happening beyond the frame. Nine News infamously experimented with this tool for a broadcast featuring Victorian politician Georgie Purcell.

But it can also be used more innocently to extend the borders of a landscape or still-life image, for example. You might do this when trying to edit a square Instagram photo to fit a 4×6 inch photo frame.
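As a rough sketch of the arithmetic behind that example, the snippet below works out how large the canvas needs to become before a photo matches a frame's proportions. The function name `expanded_canvas` and the 1080-pixel photo size are assumptions made for illustration; they are not part of any Adobe tool.

```python
def expanded_canvas(width_px, height_px, frame_w, frame_h):
    """Return the (width, height) in pixels of the smallest canvas that
    contains the photo and matches the frame's aspect ratio."""
    # Cross-multiply to compare aspect ratios without rounding errors.
    if width_px * frame_h < height_px * frame_w:
        # Photo is too narrow for the frame: widen the canvas.
        return (round(height_px * frame_w / frame_h), height_px)
    # Photo is too short for the frame (or already fits): add height.
    return (width_px, round(width_px * frame_h / frame_w))


# A hypothetical 1080 x 1080 square photo in a landscape 6 x 4 inch frame.
print(expanded_canvas(1080, 1080, 6, 4))  # (1620, 1080)
```

In this case the tool would need to invent an extra 540 pixels of width, or 270 on each side if the original stays centred.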

2. Visualising the past or the future

Photography was invented only within the past 200 years, and camera-equipped smartphones have been around for only about 25.

That leaves plenty of things that existed before cameras were common but that we might still want to visualise. This could be for educational purposes, entertainment or self-reflection.

One example is the writings of historical figures, like architect Robert Russell, who conducted the first survey of what is now Melbourne in 1836. He wrote at the time:

The soil is in this country superior to any in the colony, we have a good grazing land, and a fine supply of water: a fine harbour, a Town on which much capital (I am afraid to say how much) has been expended, enterprising settlers and flocks and herds increasing in all directions, a climate well fitted for Englishmen, and events hastening forward the necessity for some scheme of extended emigration from which we shall soon feel the benefit.

We can feed this text from Russell’s letters into a text-to-image generator and see what the area may have looked like.

Midjourney image by T.J. Thomson

Conversely, we might want to look ahead and see if AI can help us visualise what is to come.

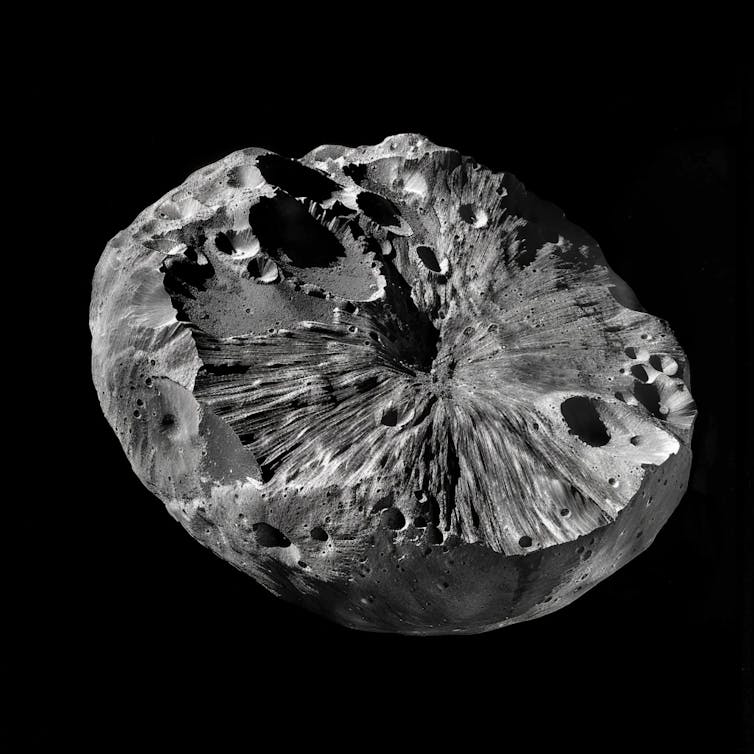

For example, a probe is currently heading to a never-before-seen metal asteroid, 16 Psyche. It’s projected to reach the asteroid in 2029. We can feed an AI tool a description from NASA to get a rough sense of what the asteroid might look like.

Midjourney image by T.J. Thomson

NASA currently works with artists to illustrate concepts we can’t see, but artists could also draw on AI to help create these renderings.

3. Brainstorming how to visualise difficult concepts

Where we might have once turned to Google Images or Pinterest boards for visual inspiration, AI can also help with suggestions on how to show difficult-to-visualise subject matter.

Take the Mariana Trench, for example. It is one of the deepest places on Earth, and few people have ever seen it firsthand. It’s also pitch black, and artificial light wouldn’t allow you to see very far.

But ask AI for suggestions on how to visualise this spot and it provides a number of ideas, including taking a more familiar landmark, such as the Burj Khalifa, the world’s tallest structure, and placing a scaled model next to the trench to better allow audiences to appreciate its depth.

Another suggestion is to create a layered illustration that shows the flora and fauna that live in each of the ocean’s five zones above the trench.

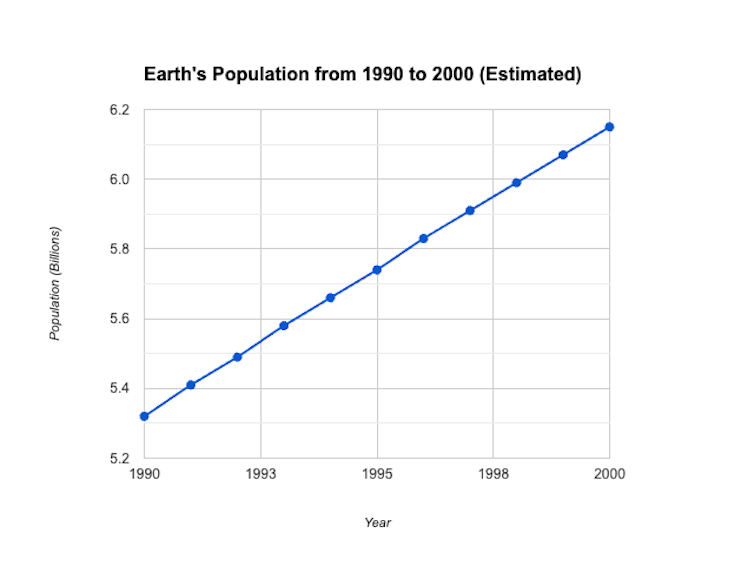
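To get a feel for the scale involved in the Burj Khalifa comparison suggested above, here is a quick back-of-the-envelope calculation. The figures are approximate and are assumptions for this sketch: the tower is about 828 metres tall, and the deepest point of the trench (the Challenger Deep) is commonly put at a little under 11,000 metres, though estimates vary.

```python
# Approximate figures, assumed for illustration only.
BURJ_KHALIFA_HEIGHT_M = 828
CHALLENGER_DEEP_DEPTH_M = 10_935  # published estimates differ slightly


def towers_to_fill(depth_m, tower_m):
    """How many towers, stacked end to end, would span the given depth."""
    return depth_m / tower_m


ratio = towers_to_fill(CHALLENGER_DEEP_DEPTH_M, BURJ_KHALIFA_HEIGHT_M)
print(f"About {ratio:.1f} towers stacked end to end")  # About 13.2 towers stacked end to end
```

In other words, a scaled model of the tower would take up only about one thirteenth of the illustration's depth.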

4. Visualising data

Depending on the tool, you can prompt AI with numbers, not just text.

For example, you might upload a spreadsheet to ChatGPT 4 and ask it to visualise the results. Or, if the data is already publicly available (such as Earth’s population over time), you might ask a chatbot to visualise it without even having to supply a spreadsheet.

Gemini image by T.J. Thomson

It’s a great way to speed up such tasks, as long as you keep in mind AI can “hallucinate”, or make things up, so you need to double check the accuracy of the results.
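One simple way to double check is to compare a few of the chatbot's numbers against a source you trust. The sketch below is only an illustration: the function name `flag_mismatches` is hypothetical, the reference figures are rounded world population estimates in billions, and the "chatbot" figures are invented, with the last one deliberately wrong.

```python
def flag_mismatches(claimed, reference, tolerance=0.05):
    """Return the keys where a claimed value is missing or differs from
    the reference value by more than the given relative tolerance."""
    flagged = []
    for key, ref_value in reference.items():
        value = claimed.get(key)
        if value is None or abs(value - ref_value) / ref_value > tolerance:
            flagged.append(key)
    return flagged


# Rounded reference estimates of world population, in billions.
reference_billions = {1950: 2.5, 2000: 6.1, 2020: 7.8}
# Hypothetical chatbot output; the 2020 figure is deliberately wrong.
chatbot_billions = {1950: 2.5, 2000: 6.1, 2020: 9.4}

print(flag_mismatches(chatbot_billions, reference_billions))  # [2020]
```

A spot check like this won't catch every error, but it can quickly reveal whether a chart is built on made-up numbers.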

5. Creating simple moving images

You can create a simple yet effective animation by uploading a photo to an AI tool like Runway and giving it an animation command, such as zooming in, zooming out or tracking from left to right. That’s what I’ve done with this historical photo preserved by the State Library of Western Australia.

T.J. Thomson

Another way you can experiment with video is using Runway’s text-to-video feature to describe the scene you want to see and let it make a video for you. I used this description to create the following video:

Tracking shot from left to right of the snowy mountains of Nagano, Japan. Clouds hang low around the mountains and they are about 50m away.

T.J. Thomson

6. Generating a colour palette or simple graphics

Maybe you’re creating a logo for your small business or helping a friend with the design of an event invitation. In these cases, having a consistent colour palette can help unify your design.

You can ask generative AI services like Midjourney or Gemini to create a colour palette for you based on the event or its vibe.

Midjourney image by T.J. Thomson

If you’re designing a website or poster and need some icons to represent certain parts of the message, you can turn to AI to generate them for you. This is true for both browser-based generators, like Adobe Firefly, and desktop apps with built-in AI, like Adobe Illustrator.

Next time you’re interacting with a generative AI chatbot, ask it what it’s capable of. In addition to these six use cases, you might be surprised to know that generative AI can also write code, translate content, make music and describe images. The ability to describe images can be handy for writing alt-text descriptions and making the web more accessible for those with vision impairments.